Incremental Learning in Diagonal Linear Networks

Updated: 2023-09-30 20:28:19

Diagonal linear networks (DLNs) are a toy simplification of artificial neural networks; they consist of a quadratic reparametrization of linear regression, which induces a sparse implicit regularization. In this paper, we describe the trajectory of the gradient flow of DLNs in the limit of small initialization. We show that incremental learning is effectively performed in the limit: coordinates are successively activated, while the iterate is the minimizer of the loss constrained to have support on the active coordinates only. This shows that the sparse implicit regularization of DLNs decreases with time. For technical reasons, this work is restricted to the underparametrized regime with anti-correlated features.
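As a rough numerical illustration of the incremental learning described above, the sketch below runs plain gradient descent (used here as a discretization of gradient flow) on one common DLN parametrization, beta = u*u - v*v, started from a small initialization `alpha`. The parametrization, the orthogonal toy design, the values of `alpha`, `lr`, and `steps`, and the helper name `train_dln` are all assumptions made for illustration; none of them are taken from the paper.

```python
import numpy as np

def train_dln(X, y, alpha=1e-6, lr=0.01, steps=3000):
    """Gradient descent on L(u, v) = ||X (u*u - v*v) - y||^2 / (2n).

    Returns the path of beta = u*u - v*v, one row per step.
    """
    n, d = X.shape
    u = np.full(d, alpha)  # small initialization, so beta starts at 0
    v = np.full(d, alpha)
    path = np.empty((steps, d))
    for t in range(steps):
        beta = u * u - v * v
        path[t] = beta
        grad_beta = X.T @ (X @ beta - y) / n  # gradient of the loss in beta
        # Chain rule: dL/du = 2 u * grad_beta, dL/dv = -2 v * grad_beta.
        u, v = u - lr * 2 * u * grad_beta, v + lr * 2 * v * grad_beta
    return path

# Hypothetical toy problem: orthogonal features with X^T X / n = I.
beta_star = np.array([3.0, -2.0, 1.0])
X = np.sqrt(3.0) * np.eye(3)
y = X @ beta_star

path = train_dln(X, y)
# First step at which each coordinate exceeds half of its target magnitude.
activation = np.argmax(np.abs(path) > 0.5 * np.abs(beta_star), axis=0)
print("activation steps:", activation)
print("final beta:", np.round(path[-1], 4))
```

In this toy run the coordinates should switch on one after another, largest target magnitude first, while the not-yet-active coordinates stay close to zero. Choosing a smaller `alpha` should make the successive activations sharper, at the cost of more steps before each one occurs.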

, Membership Courses Tutorials Projects Newsletter Become a Member Log in Members Only Visualization Tools and Learning Resources , September 2023 Roundup September 28, 2023 Topic The Process roundup Welcome to The Process where we look closer at how the charts get made . This is issue 258. I’m Nathan Yau . Every month I collect tools and resources to help you make better charts . Here’s the good stuff for . September To access this issue of The Process , you must be a . member If you are already a member , log in here See What You Get The Process is a weekly newsletter on how visualization tools , rules , and guidelines work in practice . I publish every Thursday . Get it in your inbox or read it on FlowingData . You also gain unlimited access to hundreds of hours worth of step-by-step

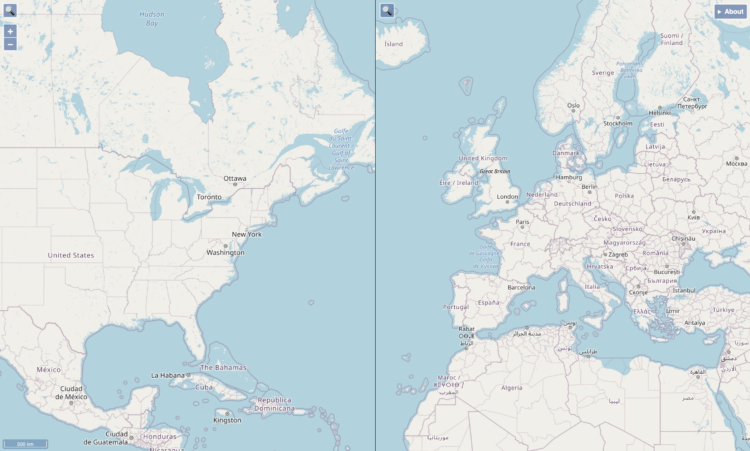

, Membership Courses Tutorials Projects Newsletter Become a Member Log in Members Only Visualization Tools and Learning Resources , September 2023 Roundup September 28, 2023 Topic The Process roundup Welcome to The Process where we look closer at how the charts get made . This is issue 258. I’m Nathan Yau . Every month I collect tools and resources to help you make better charts . Here’s the good stuff for . September To access this issue of The Process , you must be a . member If you are already a member , log in here See What You Get The Process is a weekly newsletter on how visualization tools , rules , and guidelines work in practice . I publish every Thursday . Get it in your inbox or read it on FlowingData . You also gain unlimited access to hundreds of hours worth of step-by-step Membership Courses Tutorials Projects Newsletter Become a Member Log in Two maps with the same scale September 26, 2023 Topic Maps Josh Horowitz scale When you compare two areas on a single map , it can be a challenge to compare the actual size of them because of the trade-offs with projecting a three-dimensional space onto a two-dimensional space . Josh Horowitz made a thing that automatically rescales side-by-side maps as you pan and zoom , so that you get a more accurate . comparison Related Scale of Ukrainian cities Areas still controlled by Ukraine Vintage relief maps Become a . member Support an independent site . Make great charts . See what you get Projects by FlowingData See All Literacy Scores by Country , in Reading , Math , and Science See how your country . compares How We

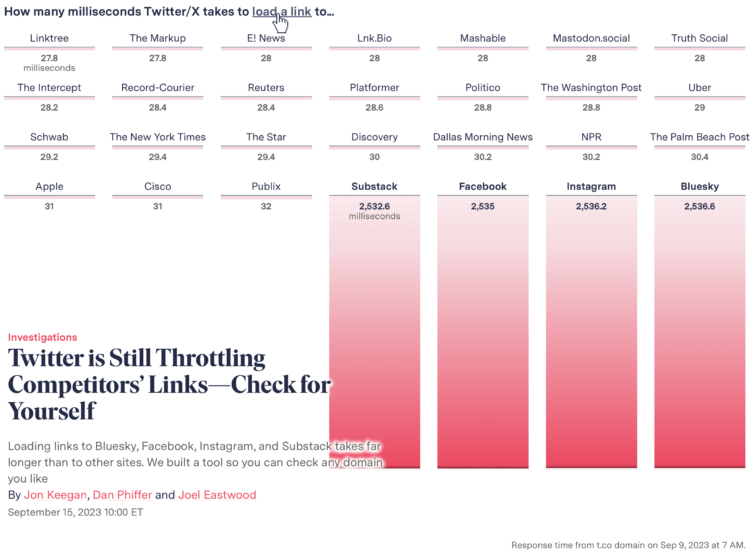

Membership Courses Tutorials Projects Newsletter Become a Member Log in Two maps with the same scale September 26, 2023 Topic Maps Josh Horowitz scale When you compare two areas on a single map , it can be a challenge to compare the actual size of them because of the trade-offs with projecting a three-dimensional space onto a two-dimensional space . Josh Horowitz made a thing that automatically rescales side-by-side maps as you pan and zoom , so that you get a more accurate . comparison Related Scale of Ukrainian cities Areas still controlled by Ukraine Vintage relief maps Become a . member Support an independent site . Make great charts . See what you get Projects by FlowingData See All Literacy Scores by Country , in Reading , Math , and Science See how your country . compares How We Membership Courses Tutorials Projects Newsletter Become a Member Log in Twitter slows competitor links September 22, 2023 Topic Infographics slow The Markup Twitter When you click a link on Twitter , you go through a Twitter shortlink first and then to the place you want to go . When you click on a link that points to one of Twitter’s competitors , by complete coincidence I am sure , there’s a delay . For The Markup , Jon Keegan , Dan Phiffer and Joel Eastwood ran the tests You can also try it with your own . URLs I’m into the animated opening . graphic Related Oddly specific ad profiles Who pays for Twitter Unregulated location data industry Become a . member Support an independent site . Make great charts . See what you get Projects by FlowingData See All How Many Kids We Have and When

Membership Courses Tutorials Projects Newsletter Become a Member Log in Twitter slows competitor links September 22, 2023 Topic Infographics slow The Markup Twitter When you click a link on Twitter , you go through a Twitter shortlink first and then to the place you want to go . When you click on a link that points to one of Twitter’s competitors , by complete coincidence I am sure , there’s a delay . For The Markup , Jon Keegan , Dan Phiffer and Joel Eastwood ran the tests You can also try it with your own . URLs I’m into the animated opening . graphic Related Oddly specific ad profiles Who pays for Twitter Unregulated location data industry Become a . member Support an independent site . Make great charts . See what you get Projects by FlowingData See All How Many Kids We Have and When Membership Courses Tutorials Projects Newsletter Become a Member Log in Members Only Calming Data September 21, 2023 Topic The Process calm feeling insight Welcome to The Process where we look closer at how the charts get made . This is issue 257. I’m Nathan Yau . We make charts for a lot of reasons : getting status updates , finding trends , evaluating accuracy , testing hypotheses , decorating , and proving points . For me , chart-making provides a perspective that’s beyond my own , which can be . calming To access this issue of The Process , you must be a . member If you are already a member , log in here See What You Get The Process is a weekly newsletter on how visualization tools , rules , and guidelines work in practice . I publish every Thursday . Get it in your inbox or read it on

Membership Courses Tutorials Projects Newsletter Become a Member Log in Members Only Calming Data September 21, 2023 Topic The Process calm feeling insight Welcome to The Process where we look closer at how the charts get made . This is issue 257. I’m Nathan Yau . We make charts for a lot of reasons : getting status updates , finding trends , evaluating accuracy , testing hypotheses , decorating , and proving points . For me , chart-making provides a perspective that’s beyond my own , which can be . calming To access this issue of The Process , you must be a . member If you are already a member , log in here See What You Get The Process is a weekly newsletter on how visualization tools , rules , and guidelines work in practice . I publish every Thursday . Get it in your inbox or read it on